CLI Agents: The New Standard of AI Coding

In this article, we will explore how AI assistance for coding has evolved. We will look at chat interfaces, autocomplete, IDE agents, and of course CLI agents. We will also discuss why MCP is useful and what it actually is. Using the CLI agent Claude Code as an example, we will explore how to use AI effectively for coding.

- CLI Agents: The New Standard of AI Coding

- CLI Agents Part 2: Claude Code Best Practices

- CLI Agents Part 3: Business Central MCP Server

The evolution of AI coding tools

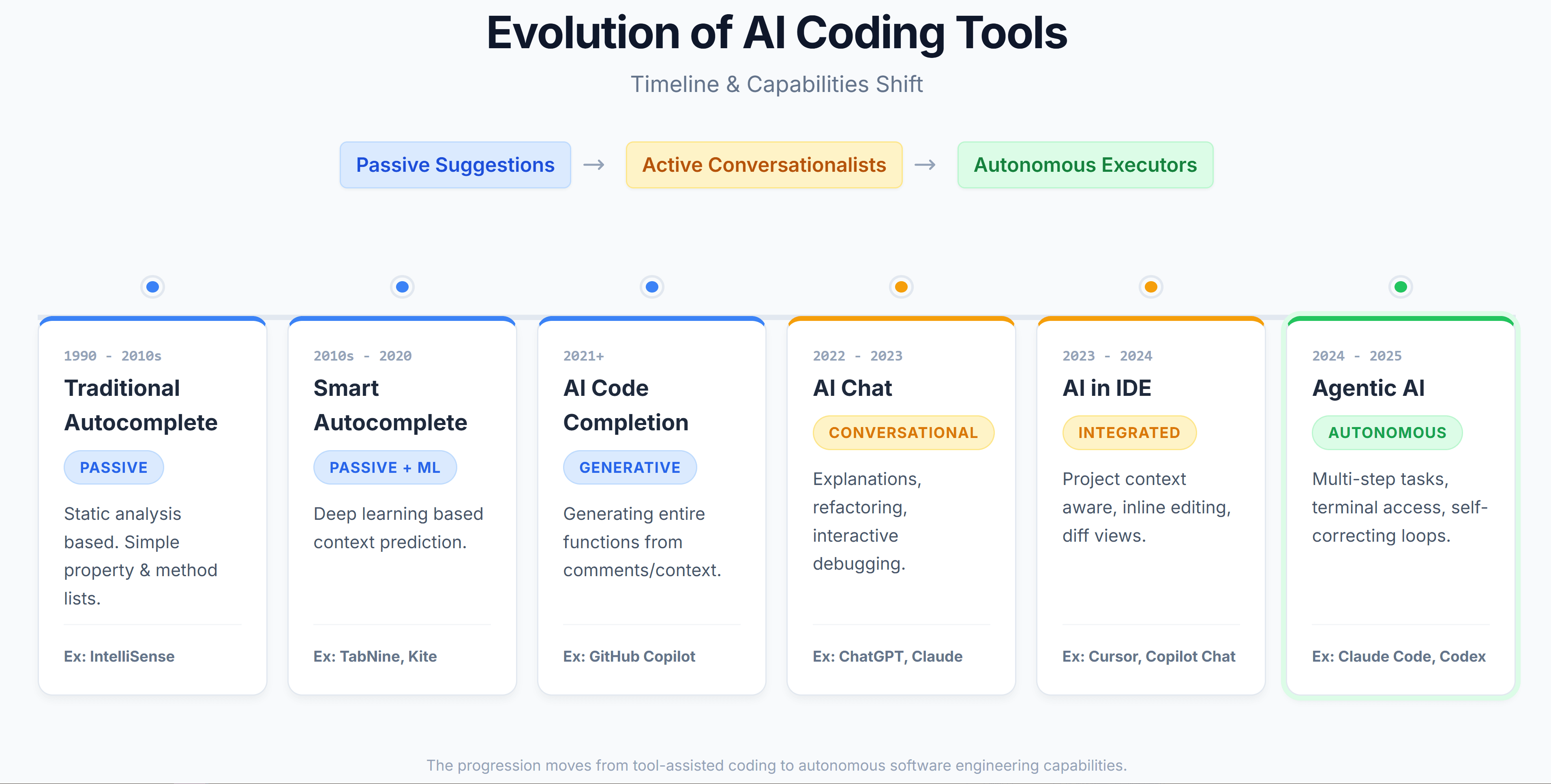

In reality, the evolution of coding tools began long before LLMs became popular. There was everything from entering programs manually on punched cards, to higher-level processor instructions via assembly, to compilers and interpreters, and so on. We will not go into that here, as it is beyond the scope of this article. Instead, we will focus on the more relevant history of how today’s AI tools have developed, without diving too deeply into the details.

The closest predecessor of AI coding assistance was traditional autocomplete, such as IntelliSense. Based on static analysis, language libraries, and, in modern editors, the capabilities of the Language Server Protocol (LSP), it offers code completion suggestions. We all still use this tool constantly when coding.

Later, this idea evolved in tools like TabNine, which enhanced static analysis with a new approach: deep learning. This was one of the first real uses of AI in coding assistance, where the current context of the code started to matter. It was still a very limited tool, but it was the beginning.

Then came true AI autocomplete. When GitHub Copilot appeared in 2021–2022, it was on a completely different level, a real qualitative leap forward. The project context finally started to be taken into account, and the tool began suggesting entire functions based on it.

However, to be honest, every one of my attempts to use AI code autocomplete has ended with me turning these features off, because they only annoyed me. I know that many people feel differently, but this is my view. I do not want a tool to guess what I am trying to do for me, at least not until these features learn to read minds.

Then came the era of AI chat tools, which began with ChatGPT. A chat interface is still the most popular way to interact with LLMs. AI chats are used for many different purposes, including coding. Using an AI chat for coding is a completely different paradigm: we ask a specific question and request a concrete solution, trying to provide enough context to the LLM to obtain a more accurate answer. However, there are certain issues with using chat for coding, and we will look at them next.

The next stage of this evolution was AI integrated directly into the IDE, with Copilot in VS Code or tools like Cursor as good examples. They resolved the most obvious drawbacks of using an AI chat for coding: there was no longer any need to copy code into a chat window, the project context started to be taken into account, and feedback from the compiler and linter began to be used. All these factors further improved the coding experience and, one could say, marked another step up to a new level.

And finally, we are entering the era of agentic AI. At this stage, we gain the most autonomy and quality so far. We can hand off a task for several hours and receive a high-quality result. Agentic AI systems correct themselves, proactively explore the project context, and support parallelization of tasks. Overall, this is another qualitative step forward in the evolution of AI coding tools. Good examples include CLI agents such as Claude Code and Codex, as well as other, non-CLI agents.

Of course, this classification is quite approximate, and the same IDEs can include elements of agentic AI as well. However, the overall pattern of evolution is clear.

Coding CLI agents

I have often used integrated AI in IDEs, specifically Cursor and Copilot in VS Code. What I like most is the flexibility of switching models on the fly while working with code. It is very convenient to compare the new Gemini 3 Pro with Claude Opus 4.5 or GPT-5.1 on real-world tasks. In addition, these tools already provide a certain level of autonomy. As I mentioned earlier, modern IDEs already contain agentic elements.

However, lately I have been using integrated AI in the IDE less and less, and there are many reasons for that. One of the main ones is the price. Take Cursor as an example: it is extremely expensive, and the issue is not even the price per token, but the way those tokens are spent. In my experience, Cursor uses tokens very inefficiently. The root of the problem is that its tool calling is rather specific and far from optimal. The second reason is quality. After many comparisons, I have become convinced that the agent and tool calling in Cursor actually reduce the quality of some LLMs.

Essentially, CLI agents are assistants that live in your command line, giving you a flexible way to configure and use AI.

In the end, I can say that CLI agents are better because they are:

- Cheaper, mainly due to more efficient tool calling

- Higher quality, again thanks to more effective tool calling

- More autonomous, much more stable and predictable

- Better at parallelising tasks

- Providing direct terminal access

By the way, in my previous post about AI I compared several models on a practical Business Central task. My conclusion back then was that the then-new Claude 4.5 performed quite poorly. It turns out it was not my imagination. Anthropic actually acknowledged the issues and wrote about them in detail.

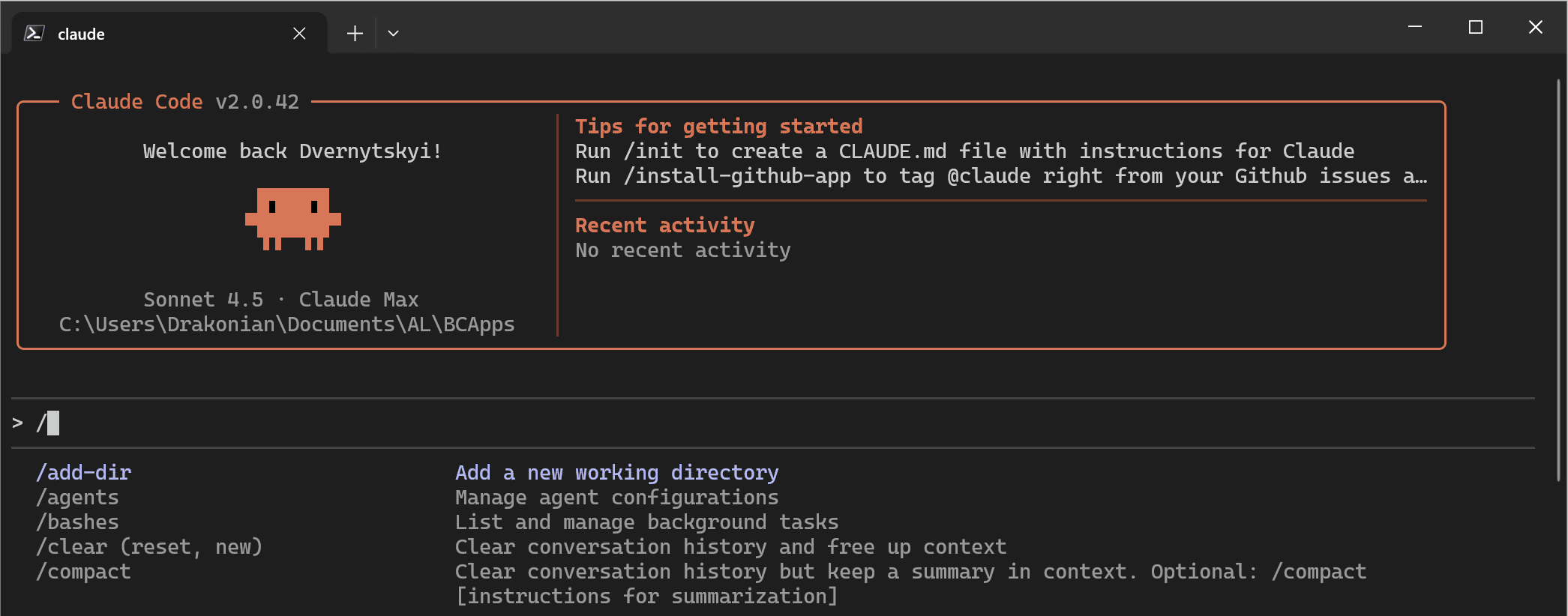

Claude Code

That is why I switched to CLI agents for coding. In my view, these are currently the best AI tools for a developer. And if you think about it, it is logical that the creators of the models themselves build the best agents on top of their own LLMs. Since I consider Anthropic’s models to be the best for coding, I primarily use Claude Code.

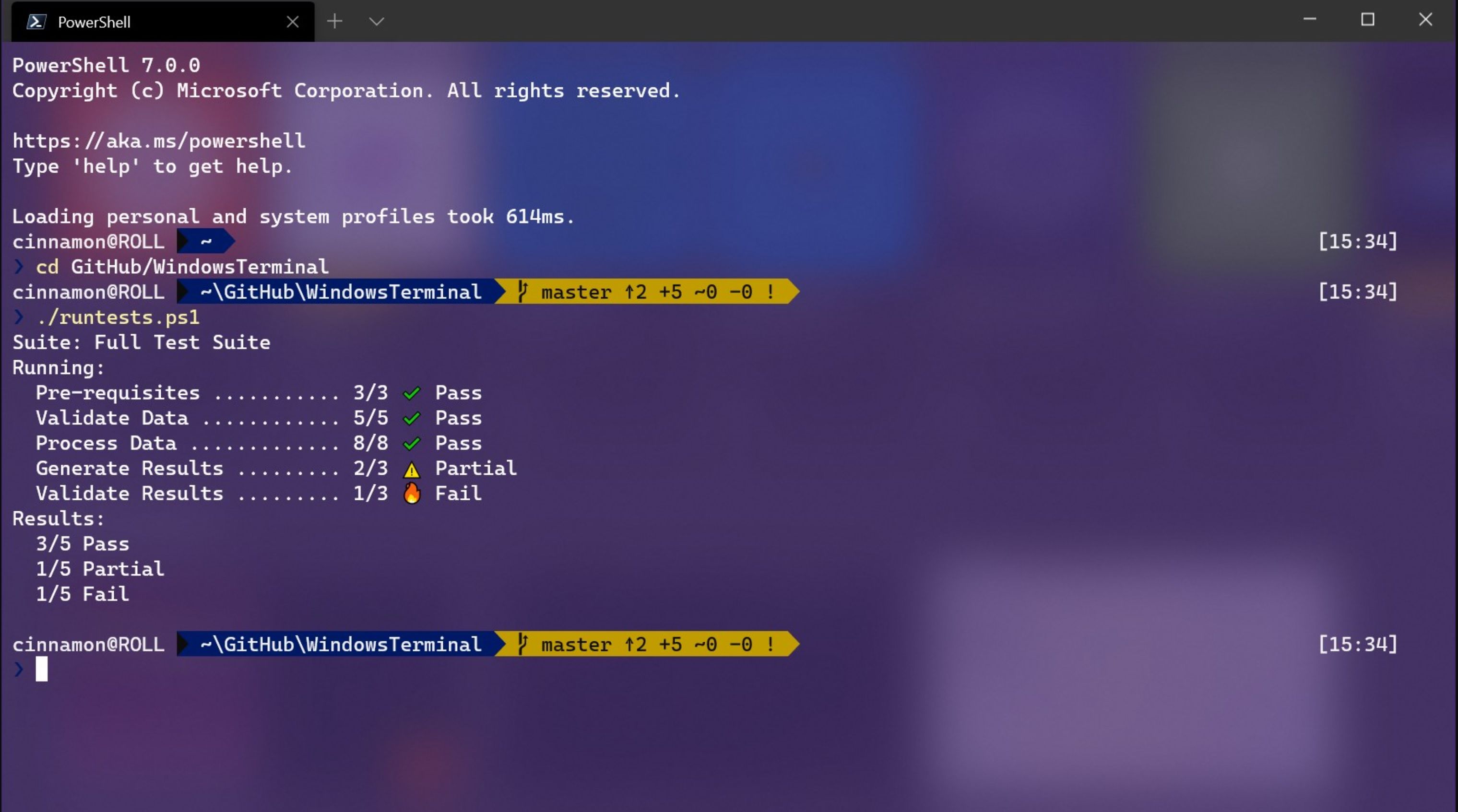

First of all, you need a good console to work with an AI CLI agent.

Like most Business Central developers, I work on Windows, and historically the built-in console has not been great. But things have changed. Microsoft has developed an excellent terminal, Windows Terminal. It is available by default on Windows 11; on Windows 10 you need to install it manually:

https://apps.microsoft.com/detail/9n0dx20hk701

Next, we need to install Claude Code itself, which is quite easy to do with a single command in the terminal. You can also find more detailed information in the documentation.

irm https://claude.ai/install.ps1 | iex

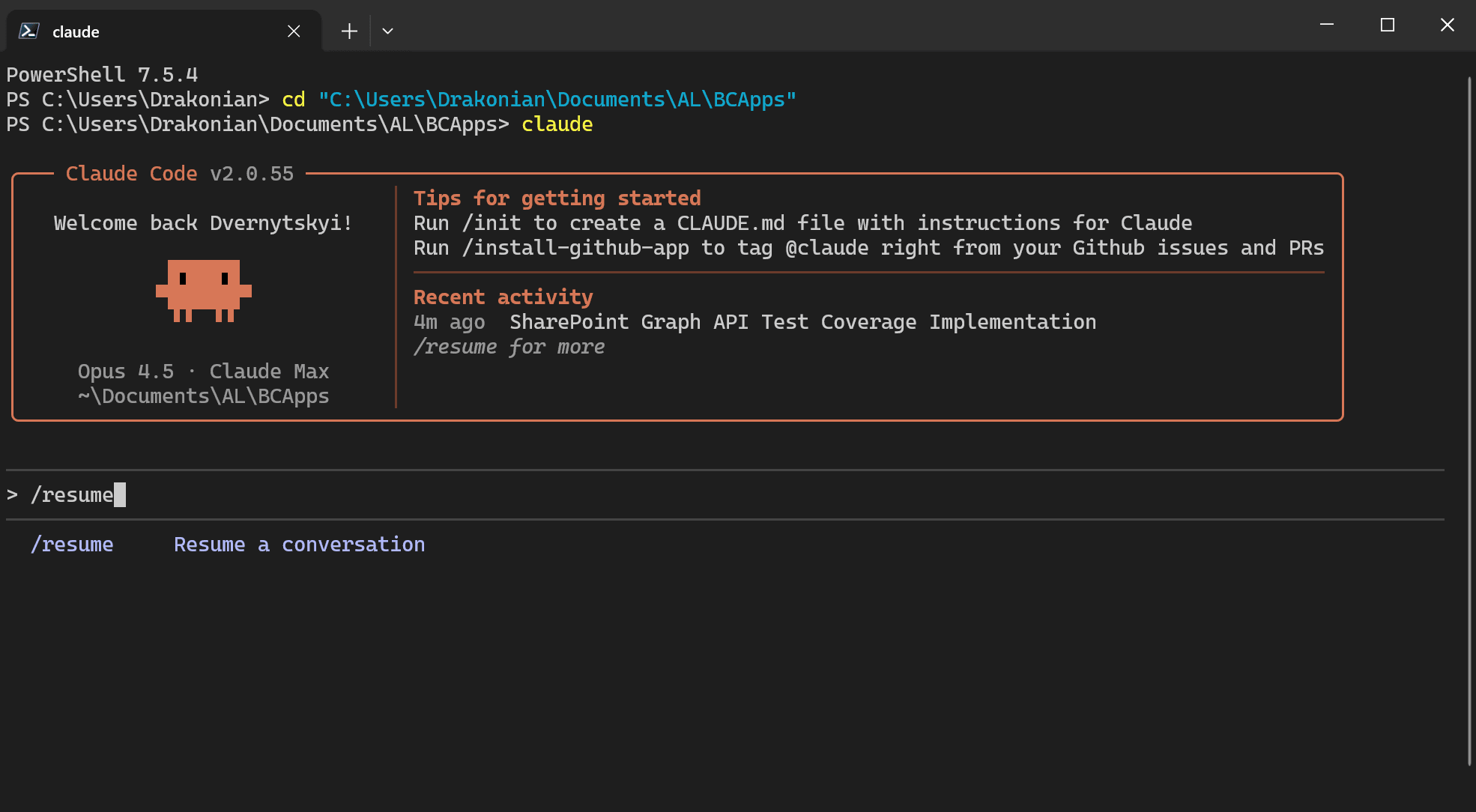

Now let's use our CLI agent. Claude Code stores sessions at the folder level, so essentially one folder corresponds to one project. Therefore, we switch to our project folder in the terminal. In my case this is:

cd "C:\Users\Drakonian\Documents\AL\BCApps"

After that, we can start a Claude Code session with a simple command:

claude

If we want to continue the previous session from this folder, we use the following Claude Code command:

/resume

This is our interface for interacting with the LLM. We simply write our requests directly in the terminal. We can also point to specific files or folders using @ to provide more precise context. I can also recommend using the Ctrl+G shortcut to write your prompt in a notepad editor if it is quite large (although in that case the automatic @ feature will not work).

I may write in more detail about using Claude Code effectively in a future post, as there is a lot to cover, such as what CLAUDE.md is and how to configure MCP. For now, let us simply discuss what MCP is and why it is needed, especially for CLI agents.

MCP

MCP is not magic!

I have noticed that some terms and technologies related to AI seem to be wrapped in a kind of haze of mystery, magic, or even shamanism. In reality, however, they are quite simple and understandable once you take the time to study them.

MCP (Model Context Protocol) is a protocol for interaction between an LLM and the outside world. It is what allows the model to receive feedback from external sources. In turn, this makes it possible for an AI agent to adjust its actions based on the context and on how the environment reacts to the agent’s previous actions.
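To make this less mysterious, here is a minimal sketch of what travels over the wire. MCP messages are plain JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The tool name `list_customers` and its arguments are hypothetical and chosen only for illustration; they are not taken from any real MCP server.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def make_tool_result(request_id, text):
    """Build the matching response; the result carries a list of content items."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }


# Hypothetical tool name and arguments, for illustration only.
request = make_tool_call(1, "list_customers", {"top": 5})
response = make_tool_result(1, "5 customers returned")

print(json.dumps(request, indent=2))
print(json.dumps(response, indent=2))
```

The response is matched to the request by `id`, and the text in `content` is what the model reads as feedback from the outside world.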

This is precisely why using MCP is so important. In essence, it significantly improves and accelerates the work of an AI agent. We no longer need to manually point it to specific data in our requests. The AI agent can now examine this data on its own, or even trigger the necessary actions in external resources by itself.
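The feedback loop described above can be sketched in a few lines. This is a toy illustration, not how Claude Code is actually implemented: `run_agent`, `get_record`, and `scripted_planner` are hypothetical names, and the planner is a hard-coded stand-in for the LLM. The point is only that the result of each tool call, including a failure, is fed back and shapes the next step.

```python
def run_agent(plan_next_step, tools, max_steps=5):
    """Run a tiny act-and-observe loop.

    plan_next_step stands in for the LLM: it receives the history of
    (tool, arguments, result) tuples and returns the next (tool, arguments)
    pair, or None when it considers the task finished.
    """
    history = []
    for _ in range(max_steps):
        step = plan_next_step(history)
        if step is None:
            break
        name, arguments = step
        try:
            result = tools[name](**arguments)
        except Exception as error:
            # Feed the failure back to the planner instead of stopping.
            result = f"error: {error}"
        history.append((name, arguments, result))
    return history


def get_record(record_id):
    """Hypothetical external tool: look up a record by id."""
    data = {1: "Customer A"}
    return data[record_id]


def scripted_planner(history):
    """Hard-coded stand-in for the model's decisions."""
    if not history:
        return ("get_record", {"record_id": 2})  # first guess, will fail
    if history[-1][2].startswith("error"):
        return ("get_record", {"record_id": 1})  # corrected after feedback
    return None  # last call succeeded, so the task is done


history = run_agent(scripted_planner, {"get_record": get_record})
for name, arguments, result in history:
    print(name, arguments, result)
```

Without the tool, the planner would have no way to learn that its first guess was wrong; with it, the correction happens without any human in the loop.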

So, by adding the right MCP servers, we gain better autonomy, higher quality, and faster performance.

A good example is the recently released Business Central MCP, which makes it possible to interact with Business Central data through API pages.

Summary

For me, the main conclusion is simple: the best way to use AI for coding today is through CLI agents with well-configured MCP. Traditional autocomplete and even smart IDE assistants helped a lot at earlier stages, but they are limited by cost, token usage, and how much control they give to the developer.

CLI agents like Claude Code give me exactly what I need: clear, predictable behavior, good autonomy, and the ability to plug in external tools and data sources through MCP. Instead of copying code into a chat window or guessing what the IDE agent is doing with my tokens, I see every command, every tool call, and every result right in the terminal.

For Business Central developers this is especially powerful. We already work heavily with scripts, APIs, and automation, so adding a CLI agent and something like the Business Central MCP feels like a natural next step, not magic. It is just a more effective way to connect an LLM to the tools and data we already use every day.

Next time, we will take a closer look at configuring Claude Code and at using and extending MCP, all in the context of Business Central.