Table Data Editor 4.0

Today I have a lot of news: a major update to Table Data Editor, a new logo, a website redesign, and a new domain. These changes took a lot of time and effort, and I hope you'll like them. But let's go over everything in order.

Remember that editing tables directly can be risky, and it’s best to avoid doing so in Production or without a clear understanding of what you’re doing!

Agenda

- Website updates

- Table Data Editor

- Performance

- Flowfields

- Loading Dialog

- Parallel Reading

- Caching mechanism and Refresh

- Automated tests

- CI/CD Github workflows

- Summary

Website updates

So, let me start with the news related to this website. I've noticed that the content I create primarily focuses on Business Central, with less emphasis on Navision. This is no coincidence my work assignments and attention are mainly centered around Business Central. I find myself working less and less with Navision RTC/Classic, and I’m very happy about that. I enjoy working with Business Central and appreciate the direction the product has been developing in recent years.

As a result, the domain vld-nav.com no longer feels very relevant, and I decided it was time for a fresher domain: vld-bc.com. Of course, I plan to maintain the old domain, but from now on, a redirect has been set up from the old domain to the new one: vld-nav.com -> vld-bc.com.

And of course, as you might have noticed, I’ve completely redesigned the website and not just that, it’s running on an entirely new engine. This allows me to work more flexibly with both content and design. For instance, there’s now syntax highlighting for AL code.

One of the most noticeable updates to the site is the addition of a light/dark theme switcher in the header. I’m personally a fan of light themes, both on websites and in IDEs, but I know many people prefer dark themes. So, I decided to add it. To be honest, I’ve grown to like the dark theme, and now I’m not sure if I’ll remain on the side of light or finally walk the path of darkness.

I won’t list all the design updates instead, I invite you to explore them yourself. You can visit the updated pages: Home, Table Data Editor, About Me, and My Apps.

I’d also greatly appreciate any feedback you might have, as one of the main goals of this update is to enhance the experience for readers like you!

Table Data Editor

Let me remind you that Table Data Editor is a free, open-source application for Business Central that allows you to edit, delete, and add data to tables. And this is probably the most significant update I’ve ever made to this application.

Did you know that Data Editor is among the top 5 most-starred repositories of all open-source AL repositories? This reflects your interest and inspires me to continue working on it. And of course, everyone who shares feedback, creates issues, or even contributes to the project plays a huge role in its development thank you for that!

My strategy for working on the application was: start fast and do simple, then build on the idea, which is exactly what I’ve been doing. However, the time has come for more thoughtful development a new level of responsibility. And that’s exactly what we’ll be discussing next.

Let's start with a simple one, Data Editor now has its own unique logo! You can see it in the site header and in Extension Management. The idea is that it will increase the recognizability of the product and make it easier to remember the application itself.

Performance

I occasionally received feedback that Table Data Editor sometimes works very slowly, specifically when it takes a long time to load or update data. While I performed basic optimizations, I didn’t delve too deeply into the issue. Why? Because I often conducted tests on a local Docker sandbox and didn’t notice any major problems.

However, the truth is that Cloud Business Central is much slower than my computer. One of the reasons for this difference is that Cloud Business Central dynamically allocates resources and, to save costs, uses the bare minimum needed for a responsive interface. But this may not be sufficient for handling heavy workloads.

To put things into perspective, 10,000 Customers could take 7 minutes to load! Of course, there is a filtering feature available before loading data, but I noticed that it is often ignored, users load all the data first and then apply filters. But why is there such a heavy load when all we want to do is load data into the Table Data Editor?

The culprit is FlowFields, they are the reason for such performance issues. Just imagine: the Customer table has around 60 FlowFields. For each record, 60 joins need to be calculated. Naturally, this cannot work quickly.

Flowfields

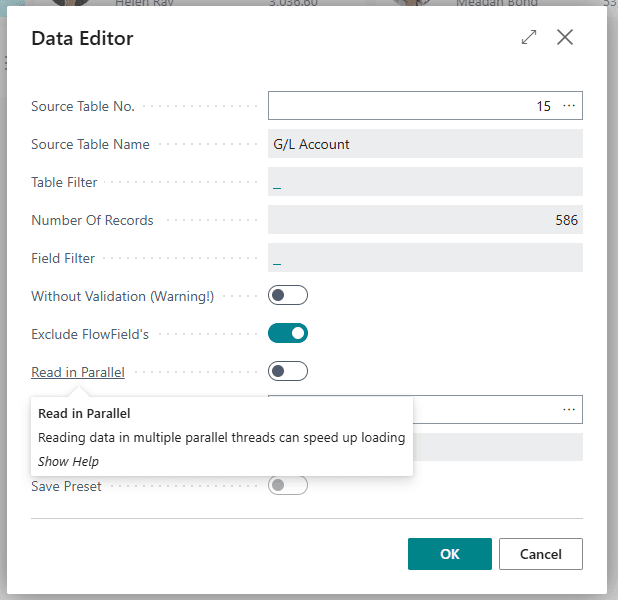

The most obvious optimization, therefore, is to avoid loading FlowFields by default. Now, when opening the Data Editor page, the Exclude FlowFields option will be enabled by default. This will allow the majority of users to achieve maximum performance right out of the box.

trigger OnOpenPage()

begin

ExcludeFlowFields := true; //much better for performance

end;

Loading Dialog

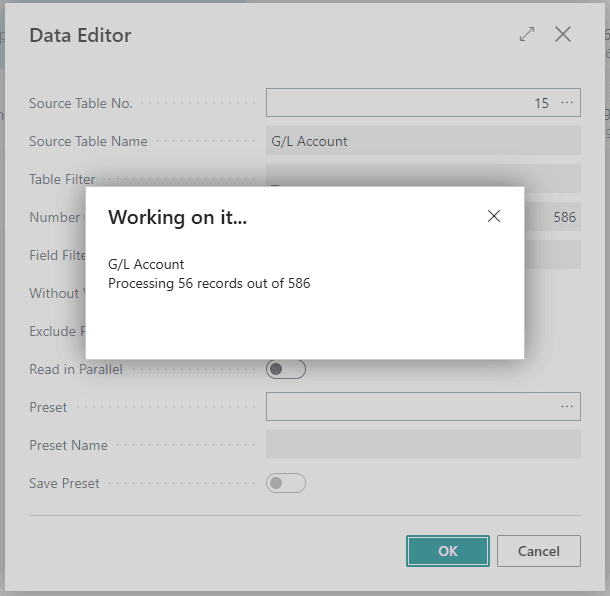

Of course, this doesn’t solve the problem when it’s necessary to load all fields, including FlowFields, or simply load a large number of records. Therefore, the next step was adding a loading dialog. This was something that should have been implemented long ago. How else can you differentiate between a loading process and a system freeze?

The loading dialog improves UI responsiveness and allows the process to be canceled at any time.

Parallel Reading

But how can we speed up the data loading process when the user wants to load a large amount of data or include FlowFields? At first glance, it might seem like there’s not much that can be done. However, there is one solution: parallel loading across multiple threads. This speeds up the loading process proportionally to the number of threads used.

In Business Central, there are several methods for running parallel/background processes:

In our case, for parallel table reading, the most suitable option is StartSession. What makes StartSession particularly interesting is that it is the only method capable of returning data into a temporary table within the current session.

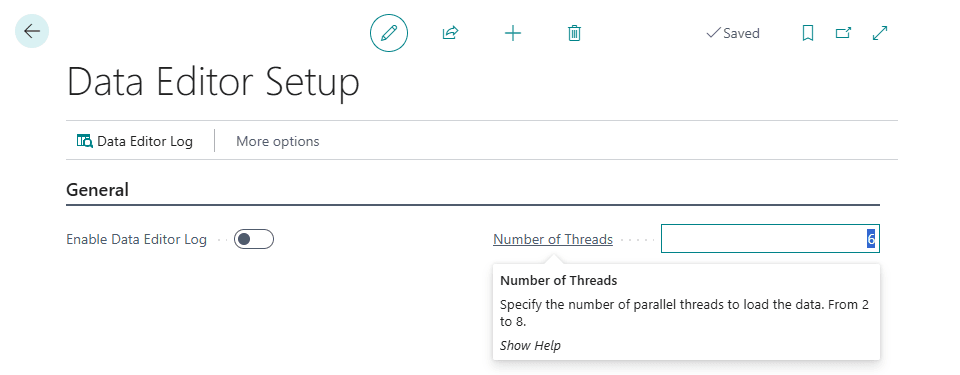

Additionally, it's important to remember that any background tasks come with certain limitations that must be considered. For example, Background Sessions Max Concurrency allows only 10 concurrent sessions, with any additional sessions queued for execution.

As a result, I decided to set the default number of concurrent threads to 6. This value can be adjusted from 2 to 8 in the Data Editor Setup.

By default, parallel reading is disabled, and you need to enable it on the main Data Editor page using the Read In Parallel flag. This functionality should only be activated if you want to speed up the loading of a large amount of data or FlowField's.

In conclusion, if your data previously took about 15 minutes to load, with 6 parallel threads, it will now take approximately 3 minutes!

Here, I want to delve deeper into the technical implementation of parallel table reading. This is a complex and fascinating topic, but to save time, I suggest we at least partially explore how it’s done.

As I mentioned earlier, StartSession is the mechanism being used. Therefore, the code responsible for reading and returning data needs to be moved to a separate codeunit, which in my case is "DET Read Data Batch".

I used a field of type BLOB called Data To Process to pass input parameters to the function. The choice of BLOB was deliberate, as text fields in tables currently have a limit of 2048 characters.

To enable parallel data reading, it’s necessary to avoid conflicts with other parallel reads. For this, I use StartIndex and EndIndex. Each thread is assigned a predefined batch of records to read. I also utilize an interesting property of the Record.Next() function: if you pass a numeric record index to Next(), it will immediately move to that record, based on the current sorting.

RecRef.Next(StartIndex);

Of course, I’m not suggesting writing code this way by default. This property should only be used with caution and a clear understanding of what you’re doing.

To avoid reading unnecessary data, I use:

RecRef.ReadIsolation := RecRef.ReadIsolation::ReadCommitted;

This ensures that only committed data is included in the Data Editor.

Final result:

trigger OnRun()

var

TempBlob: Codeunit "Temp Blob";

DataEditorMgt: Codeunit "DET Data Editor Mgt.";

ConfigProgressBar: Codeunit "Config. Progress Bar";

RecRef: RecordRef;

TempDataEditorBufferRecRef: RecordRef;

FieldRefVar: FieldRef;

FieldRefVar2: FieldRef;

JObject: JsonObject;

JToken: JsonToken;

JObjectAsTxt: Text;

StartIndex, EndIndex, TableNo, Counter, TotalCount, FieldNumber : integer;

IsParallelRun: Boolean;

FilterView: Text;

begin

TempBlob.FromRecord(Rec, Rec.FieldNo("Data To Process"));

JObjectAsTxt := GetTempBlobAsTxt(TempBlob);

JObject.ReadFrom(JObjectAsTxt);

JObject.Get('StartIndex', JToken);

StartIndex := JToken.AsValue().AsInteger();

JObject.Get('EndIndex', JToken);

EndIndex := JToken.AsValue().AsInteger();

JObject.Get('FieldNumbersToRead', JToken);

LoadFieldNoList := DataEditorMgt.ConvertListTextToListInteger(JToken.AsValue().AsText().Split(','));

JObject.Get('IsParallelRun', JToken);

IsParallelRun := JToken.AsValue().AsBoolean();

RecRef.Open(TableNo);

RecRef.SetView(FilterView);

RecRef.ReadIsolation := RecRef.ReadIsolation::ReadCommitted;

TempDataEditorBufferRecRef.GetTable(Rec);

TotalCount := EndIndex - StartIndex + 1;

RecRef.Next(StartIndex);

repeat

Counter := 0;

TempDataEditorBufferRecRef.Init();

FieldRefVar2 := TempDataEditorBufferRecRef.FieldIndex(1);

FieldRefVar2.Value(StartIndex);

FieldRefVar2 := TempDataEditorBufferRecRef.FieldIndex(2);

FieldRefVar2.Value(RecRef.RecordId());

foreach FieldNumber in LoadFieldNoList do begin

Counter += 1;

FieldRefVar := RecRef.Field(FieldNumber);

//Performance bottleneck

if FieldRefVar.Class() = FieldClass::FlowField then

FieldRefVar.CalcField();

FieldRefVar2 := TempDataEditorBufferRecRef.FieldIndex(Counter + 2);

FieldRefVar2.Value(FieldRefVar.Value());

end;

TempDataEditorBufferRecRef.Insert();

StartIndex += 1;

until (RecRef.Next() = 0) or (StartIndex > EndIndex);

end;

This is, of course, only part of the solution. We also need to define a mechanism for launching this Codeunit and collecting the returned information. Since I didn’t want to overcomplicate the code or write separate procedures for background and foreground execution, I created an intermediate function. This function runs the object in either background or foreground mode, depending on the parameters provided.

procedure ReadRecord(var TempDataEditorBuffer: Record "DET Data Editor Buffer" temporary; JObject: JsonObject; NumberOfThreads: Integer; TotalRecordCount: Integer) ActiveSessionId: Integer

var

OutStreamToProcess: OutStream;

begin

if not TempDataEditorBuffer.Get(0) then begin

TempDataEditorBuffer.Init();

TempDataEditorBuffer."Entry No." := 0;

TempDataEditorBuffer."Data To Process".CreateOutStream(OutStreamToProcess);

JObject.WriteTo(OutStreamToProcess);

TempDataEditorBuffer.Insert();

end else begin

TempDataEditorBuffer."Data To Process".CreateOutStream(OutStreamToProcess);

JObject.WriteTo(OutStreamToProcess);

TempDataEditorBuffer.Modify();

end;

TempDataEditorBuffer.CalcFields("Data To Process");

//There is no point to run process in background session if there is only 1 thread or small amount of records

if (NumberOfThreads > 1) and (TotalRecordCount > 500) then

StartSession(ActiveSessionId, Codeunit::"DET Read Data Batch", CompanyName(), TempDataEditorBuffer)

else

Codeunit.Run(Codeunit::"DET Read Data Batch", TempDataEditorBuffer);

end;

To implement the execution mechanism, I first determine the total number of records that match the filters to calculate the StartIndex and EndIndex for each thread. Then, this information is sent to the DET Read Data Batch codeunit, and the process waits for a response in the temporary table using Sleep().

However, there are a couple of interesting points to note:

- We cannot pass this.Rec (the current record instance from the page) to the codeunit because multiple parallel threads would conflict with each other. This would make the process unstable, and you might occasionally encounter one of the most dreaded errors in Business Central: "Something went wrong."

- We also cannot use an Array of Record (temporary) to receive the response. Why? Because there’s a very peculiar oddity, which I personally consider to be absolute evil, I’ve even discussed it before in Let’s Break Down the Business Central. This is actually a documented feature, which explicitly states that an array of temporary records will always retain the context of the previous entry. No matter what you do, this behavior persists. I believe this is simply a result of legacy code.

Considering all of the above, we’ll need to create separate Record temporary variables for each thread and then merge the results from them into this.Rec.

local procedure ReadRecord(NumberOfThreads: Integer)

var

TempDataEditorBuffer1: Record "DET Data Editor Buffer" temporary;

TempDataEditorBuffer2: Record "DET Data Editor Buffer" temporary;

TempDataEditorBuffer3: Record "DET Data Editor Buffer" temporary;

TempDataEditorBuffer4: Record "DET Data Editor Buffer" temporary;

TempDataEditorBuffer5: Record "DET Data Editor Buffer" temporary;

TempDataEditorBuffer6: Record "DET Data Editor Buffer" temporary;

TempDataEditorBuffer7: Record "DET Data Editor Buffer" temporary;

TempDataEditorBuffer8: Record "DET Data Editor Buffer" temporary;

DataEditorMgt: Codeunit "DET Data Editor Mgt.";

ConfigProgressBar: Codeunit "Config. Progress Bar";

TempRecRef: RecordRef;

StartIndex, EndIndex, i, PartSize, Remainder : Integer;

JObject: JsonObject;

IsParallelRun: Boolean;

ActiveSessionList: List of [Integer];

ActiveSessionListCopy: List of [Integer];

OutStreamToProcess: OutStream;

TotalRecordCount, SleepTime : Integer;

ActiveSessionId: Integer;

begin

if InitLoadDateTime = 0DT then

InitLoadDateTime := CurrentDateTime();

TempRecRef.GetTable(Rec);

if CustomTableView <> '' then

RecRef.SetView(CustomTableView);

RecRef.ReadIsolation := RecRef.ReadIsolation::ReadCommitted;

TotalRecordCount := RecRef.Count();

if TotalRecordCount = 0 then

exit;

IsParallelRun := (NumberOfThreads > 1) and (TotalRecordCount > 500);

if not IsParallelRun then

NumberOfThreads := 1;

if GuiAllowed() and IsParallelRun then

ConfigProgressBar.Init(TotalRecordCount, 1, RecRef.Caption());

SleepTime := 1000;

PartSize := TotalRecordCount div NumberOfThreads;

Remainder := TotalRecordCount mod NumberOfThreads;

StartIndex := 1;

for i := 1 to NumberOfThreads do begin

EndIndex := StartIndex + PartSize - 1;

if Remainder > 0 then begin

EndIndex += 1;

Remainder -= 1;

end;

Clear(ActiveSessionId);

Clear(OutStreamToProcess);

Clear(JObject);

JObject.Add('StartIndex', StartIndex);

JObject.Add('EndIndex', EndIndex);

JObject.Add('FilterView', CustomTableView);

JObject.Add('TableNo', RecRef.Number());

JObject.Add('IsParallelRun', IsParallelRun);

JObject.Add('FieldNumbersToRead', DataEditorMgt.ConvertIntegerListToText(LoadFieldNoList, ','));

case i of

1:

ActiveSessionId := DataEditorMgt.ReadRecord(TempDataEditorBuffer1, JObject, NumberOfThreads, TotalRecordCount);

2:

ActiveSessionId := DataEditorMgt.ReadRecord(TempDataEditorBuffer2, JObject, NumberOfThreads, TotalRecordCount);

3:

ActiveSessionId := DataEditorMgt.ReadRecord(TempDataEditorBuffer3, JObject, NumberOfThreads, TotalRecordCount);

4:

ActiveSessionId := DataEditorMgt.ReadRecord(TempDataEditorBuffer4, JObject, NumberOfThreads, TotalRecordCount);

5:

ActiveSessionId := DataEditorMgt.ReadRecord(TempDataEditorBuffer5, JObject, NumberOfThreads, TotalRecordCount);

6:

ActiveSessionId := DataEditorMgt.ReadRecord(TempDataEditorBuffer6, JObject, NumberOfThreads, TotalRecordCount);

7:

ActiveSessionId := DataEditorMgt.ReadRecord(TempDataEditorBuffer7, JObject, NumberOfThreads, TotalRecordCount);

8:

ActiveSessionId := DataEditorMgt.ReadRecord(TempDataEditorBuffer8, JObject, NumberOfThreads, TotalRecordCount);

end;

if ActiveSessionId <> 0 then

ActiveSessionList.Add(ActiveSessionId);

StartIndex := EndIndex + 1;

end;

while ActiveSessionList.Count() > 0 do begin

ActiveSessionListCopy := ActiveSessionList.GetRange(1, ActiveSessionList.Count());

foreach ActiveSessionId in ActiveSessionListCopy do

if IsSessionActive(ActiveSessionId) then begin

Sleep(SleepTime);

if GuiAllowed() then

ConfigProgressBar.UpdateCount(ProcessingLbl, 1);

end else

ActiveSessionList.Remove(ActiveSessionId);

end;

DataEditorMgt.MergeBufferData(Rec, TempDataEditorBuffer1, TempDataEditorBuffer2, TempDataEditorBuffer3,

TempDataEditorBuffer4, TempDataEditorBuffer5, TempDataEditorBuffer6, TempDataEditorBuffer7, TempDataEditorBuffer8);

if GuiAllowed() and IsParallelRun then

ConfigProgressBar.Close();

if not Rec.IsEmpty() then

Rec.FindFirst();

end;

Caching mechanism and Refresh

The Refresh mechanism has also been completely reworked. Previously, any change in data within the Table Data Editor would trigger a full reload of all data, which is obviously far from optimal.

The most straightforward solution is to implement a caching mechanism, which essentially already exists in the current instance of the page’s temporary variable. All we need to do is update only the subset of data that has changed since the last load or refresh!

This is fairly easy to implement using the system field SystemModifiedAt, which is automatically updated whenever data in the table is modified.

It’s worth noting that there is one scenario this approach doesn’t address: deleted records in the database. The Refresh will not remove them from the cache; they will remain cached. If you attempt to modify or delete such records via the Data Editor, you will encounter a runtime error stating that the record no longer exists in Business Central.

This is not a critical issue, and solving it doesn’t seem feasible at first glance. After all, we don’t want to iterate through every record, as that would effectively undermine the entire caching mechanism. Thus, the simplest solution is to leave such records in the cache.

local procedure RefreshData()

var

ConfigProgressBar: Codeunit "Config. Progress Bar";

TempDataEditorBufferRecRef: RecordRef;

LocalRecRef: RecordRef;

SystemModifiedAtFieldRef: FieldRef;

LocalFieldRefVar: FieldRef;

TempDataEditorBufferFieldRefVar: FieldRef;

IsRecordCached: Boolean;

FieldNumber: Integer;

Counter: Integer;

LastEntryNo: Integer;

PrevView: Text;

begin

LocalRecRef.Open(RecRef.Number());

if CustomTableView <> '' then

LocalRecRef.SetView(CustomTableView);

LocalRecRef.ReadIsolation := RecRef.ReadIsolation::ReadCommitted;

TempDataEditorBufferRecRef.GetTable(Rec);

TempDataEditorBufferRecRef.FilterGroup(10);

SystemModifiedAtFieldRef := LocalRecRef.Field(LocalRecRef.SystemModifiedAtNo());

SystemModifiedAtFieldRef.SetFilter('>=%1', InitLoadDateTime);

if LocalRecRef.FindSet() then begin

PrevView := Rec.GetView();

Rec.Reset();

if Rec.FindLast() then

LastEntryNo := Rec."Entry No.";

if GuiAllowed() then

ConfigProgressBar.Init(LocalRecRef.Count(), 1, LocalRecRef.Caption());

repeat

Counter := 0;

TempDataEditorBufferFieldRefVar := TempDataEditorBufferRecRef.Field(Rec.FieldNo("Source Record ID"));

TempDataEditorBufferFieldRefVar.SetRange(LocalRecRef.RecordId());

IsRecordCached := TempDataEditorBufferRecRef.FindFirst();

if not IsRecordCached then begin

LastEntryNo += 1;

TempDataEditorBufferRecRef.Init();

TempDataEditorBufferFieldRefVar := TempDataEditorBufferRecRef.FieldIndex(1);

TempDataEditorBufferFieldRefVar.Value(LastEntryNo);

TempDataEditorBufferFieldRefVar := TempDataEditorBufferRecRef.FieldIndex(2);

TempDataEditorBufferFieldRefVar.Value(LocalRecRef.RecordId());

end;

foreach FieldNumber in LoadFieldNoList do begin

Counter += 1;

LocalFieldRefVar := LocalRecRef.Field(FieldNumber);

//Performance bottleneck

if LocalFieldRefVar.Class() = FieldClass::FlowField then

LocalFieldRefVar.CalcField();

TempDataEditorBufferFieldRefVar := TempDataEditorBufferRecRef.FieldIndex(Counter + 2);

TempDataEditorBufferFieldRefVar.Value(LocalFieldRefVar.Value());

end;

if IsRecordCached then

TempDataEditorBufferRecRef.Modify()

else

TempDataEditorBufferRecRef.Insert();

if GuiAllowed() then

ConfigProgressBar.UpdateCount(ProcessingLbl, 1);

until LocalRecRef.Next() = 0;

InitLoadDateTime := CurrentDateTime();

if GuiAllowed() then

ConfigProgressBar.Close();

Rec.SetView(PrevView);

if Rec.FindFirst() then;

end;

end;

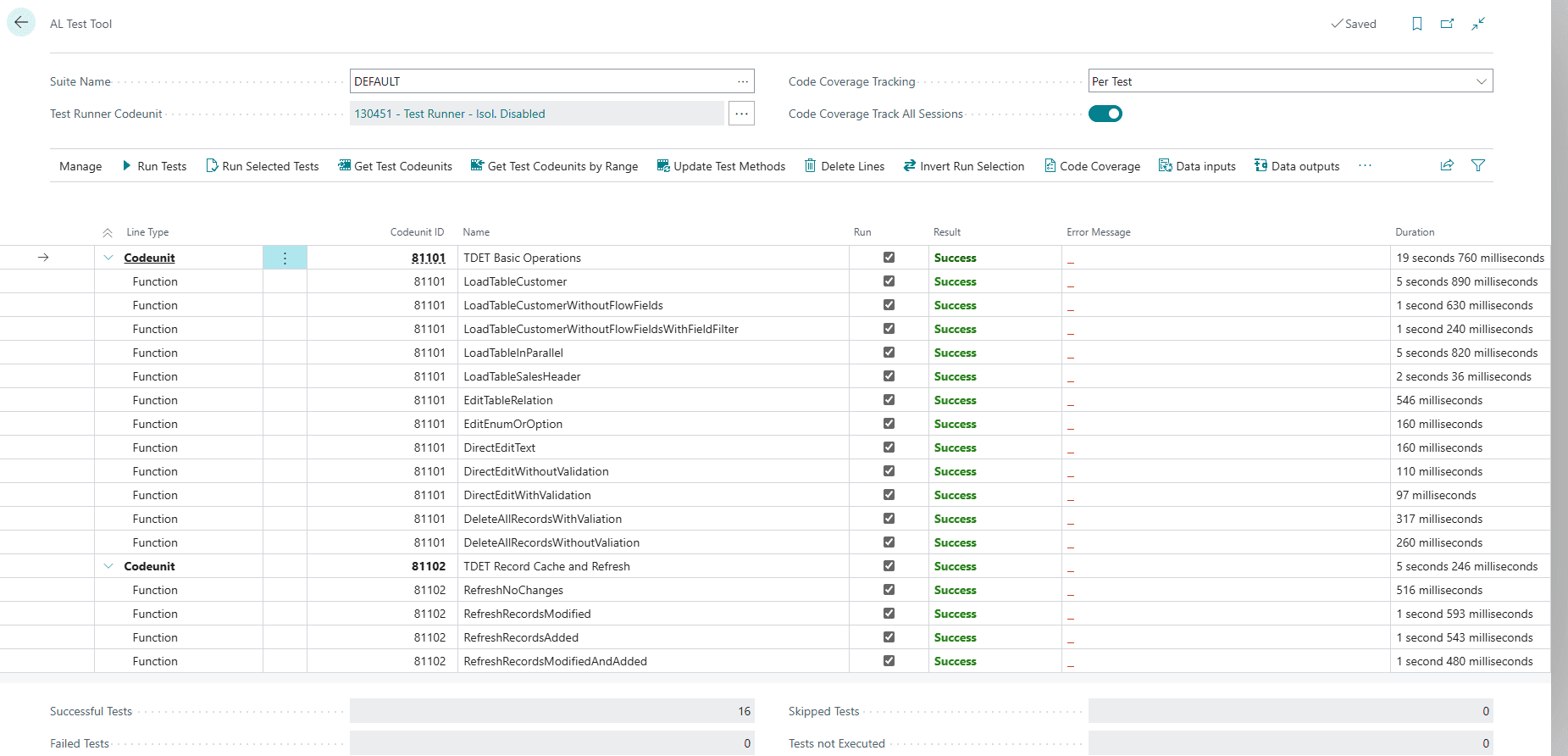

Automated tests

Every product requires testing; bugs are an inevitable part of development. Of course, there are ways to reduce their number, and one of them is automated testing. I’m confident this has been long overdue for Table Data Editor, and I have good news for you.

I finally found the time to write some basic tests. Here’s an example of one of them:

[Test]

[TransactionModel(TransactionModel::AutoRollback)]

procedure LoadTableCustomer()

var

Customer: Record Customer;

DataEditorBufferTestMode: Codeunit "TDET DE Buffer Test Mode";

DataEditor: TestPage "DET Data Editor";

DataEditorBuffer: TestPage "DET Data Editor Buffer";

ListOfRecorIds: List of [RecordId];

FieldNoFilter: List of [Integer];

RecordsToGenerate: Integer;

i: Integer;

begin

Init();

Customer.DeleteAll();

RecordsToGenerate := LibraryRandom.RandInt(100);

for i := 1 to RecordsToGenerate do begin

LibrarySales.CreateCustomer(Customer);

ListOfRecorIds.Add(Customer.RecordId());

end;

DataEditorBuffer.Trap();

DataEditor.OpenEdit();

DataEditor.SourceTableNoField.SetValue(Database::Customer);

DataEditor.ExcludeFlowFieldsField.SetValue(false);

BindSubscription(DataEditorBufferTestMode);

DataEditor.OK().Invoke();

UnbindSubscription(DataEditorBufferTestMode);

Assert.AreEqual(Customer.TableCaption(), DataEditorBuffer.Caption(), '');

LibraryDataEditor.VerifyBufferFieldsWithSourceRecord(DataEditorBuffer, ListOfRecorIds, FieldNoFilter, false);

end;

The current set of tests is not complete, and I would greatly appreciate contributions from anyone who can spare the time to help with testing.

CI/CD github workflows

Finally, the implementation of CI/CD in the Table Data Editor project, specifically the CI part, is something I’m announcing slightly ahead of its actual implementation. It will be activated in the near future.

For every code change, a Pull Request will be created, triggering compilation and the automatic execution of all tests.

Summary

I want to emphasize that the development of Table Data Editor doesn’t stop here, this is just the first significant step in its evolution. I’ve put a lot of effort into implementing all these changes, and I truly hope you’ll like them. Of course, I’d be delighted to hear any feedback you might have. Well-thought-out feedback enables rapid progress. See you in future posts!